Imagine building a highway where every car has to stop at every toll booth to check if every other car paid. That’s what monolithic blockchains do with data. Every node downloads and verifies every single transaction, even the ones it doesn’t care about. It works, but it’s slow. And expensive. Enter data availability layers: the quiet engine behind today’s most promising blockchain scaling solutions.

What Exactly Is a Data Availability Layer?

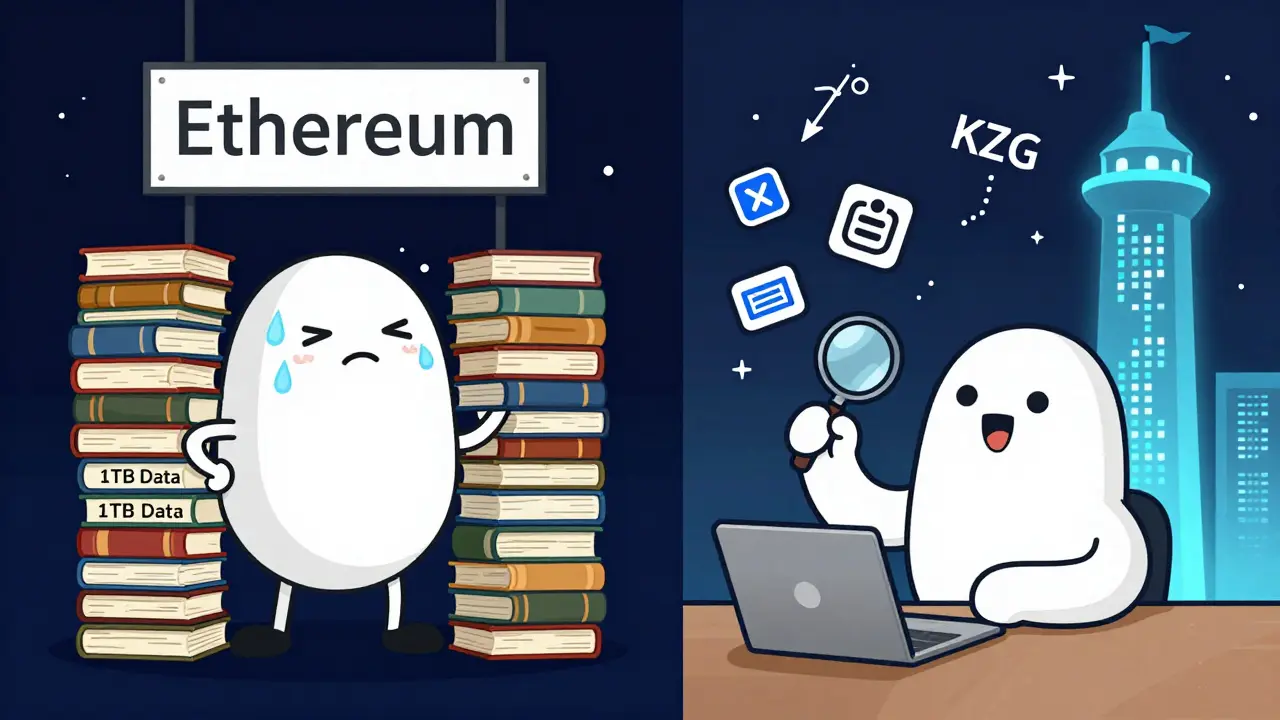

A data availability layer (DAL) is a dedicated system that stores raw transaction data and proves it’s publicly accessible. It doesn’t execute transactions. It doesn’t settle them. It just makes sure the data is there, and that anyone can check it without downloading the whole thing. Think of it like a public library that doesn’t lend books but guarantees every page of every book was printed and filed correctly.

Before DALs, blockchains like Bitcoin and Ethereum handled everything in one place: consensus, execution, and data storage. That’s efficient for security but terrible for scale. Ethereum, for example, can only process 15-45 transactions per second on its main chain. Rollups like Optimism and zkSync can handle thousands. But they need somewhere to publish their transaction data without overwhelming the main chain. That’s where DALs come in.

The Data Availability Problem

The core issue is simple: if a block producer hides part of the transaction data, no one can verify the chain’s state. They could steal funds, censor transactions, or break the whole system. Full nodes used to solve this by downloading everything. But light clients, like mobile wallets, can’t do that. They need a way to trust the data without storing it.

Enter data availability sampling. Instead of downloading a whole 1 MB block, a light client randomly checks 30-40 small pieces. Because the data is erasure-coded, a producer has to withhold a large fraction of the block to make it unrecoverable, and a random sample is very unlikely to miss a gap that large. So if the sampled pieces are available, math says the rest almost certainly is too, with 99.9% confidence. This trick, first described in 2018 by Mustafa Al-Bassam and co-authors, is what makes modular blockchains possible.

How It Works: Erasure Coding, KZG, and Sampling

Three technologies make this work together:

- Erasure coding: adding redundant pieces so that lost data can be rebuilt. Celestia uses Reed-Solomon coding to double the data size. Even if half the pieces disappear, you can rebuild the whole thing.

- KZG polynomial commitments: a mathematical trick that lets you prove a piece of data belongs to a larger set without showing the whole set. Ethereum uses this to verify blob data efficiently.

- Data availability sampling: light clients randomly pick fragments. If they’re all there, the block’s data is almost certainly available. Sampling says nothing about whether the transactions are valid, only that the data was published. No need to download everything.
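To make the erasure-coding idea concrete, here is a minimal sketch of a Reed-Solomon-style code in Python. It is not Celestia’s implementation: the field modulus `P`, the function names, and the choice of evaluation points are assumptions made purely for illustration. The data is treated as evaluations of a polynomial, the encoder extends it to twice as many evaluations, and any half of the extended pieces is enough to interpolate the original back.

```python
# Illustrative Reed-Solomon-style erasure code over a prime field.
# P, encode() and recover() are hypothetical names for this sketch only.
P = 2**31 - 1  # a prime modulus; every data symbol must be smaller than P


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points)
    that passes through the given (xi, yi) points, working mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data):
    """Extend k data symbols to 2k pieces. Pieces 0..k-1 are the data itself."""
    k = len(data)
    points = list(enumerate(data))
    return [lagrange_eval(points, x) for x in range(2 * k)]


def recover(pieces, k):
    """Rebuild the k original symbols from any k surviving pieces.
    `pieces` maps piece index -> value."""
    if len(pieces) < k:
        raise ValueError("need at least k pieces to recover the data")
    points = list(pieces.items())[:k]
    return [lagrange_eval(points, x) for x in range(k)]
```

For example, `encode([10, 20, 30, 40])` yields eight pieces whose first four are the original values, and `recover` rebuilds the data from any four of them, such as pieces 1, 4, 6, and 7. Production systems use much faster encoders, but the recovery guarantee is the same in spirit.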

Celestia vs. Ethereum: Two Paths, One Goal

There are two main approaches to data availability: dedicated layers and integrated layers.

Celestia is the purest form. It does nothing but data availability. No smart contracts. No token transfers. Just storage and proof. It’s fast (300-500 transactions per second) and cheap. Its blocks are 1.25 MB, much bigger than Ethereum’s 90 KB. Light nodes need only 1-2 GB of storage. Full nodes? Still around 1 TB, but that’s fine, because Celestia doesn’t force everyone to run one.

Ethereum is going the other way. Instead of splitting off, it’s upgrading itself. The upcoming proto-danksharding (EIP-4844) will add “blobs”: special data containers that rollups can use. These blobs are cheaper to store than regular transaction data. Ethereum Foundation estimates this will cut rollup costs by 90%. But it’s still on-chain. That means Ethereum full nodes still have to store everything. And they still need to validate it.

The trade-off? Celestia is simpler and faster, but it’s a separate chain. Ethereum is more complex, but you get its security and user base for free.
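For a feel of what a blob holds, the sketch below estimates how many blobs a rollup batch would need. The two size constants come from EIP-4844 (a blob is 4096 field elements of 32 bytes each). Everything else is an assumption for illustration: the function names are hypothetical, and the 31-usable-bytes-per-element packing is just one common convention for keeping each element below the field modulus, not something the EIP mandates.

```python
import math

FIELD_ELEMENTS_PER_BLOB = 4096  # EIP-4844 constant
BYTES_PER_FIELD_ELEMENT = 32    # EIP-4844 constant
# Assumption: pack 31 payload bytes per element so each value stays
# below the field modulus. Real rollups may use other encodings.
USABLE_BYTES_PER_ELEMENT = 31


def raw_blob_size():
    """Size of one blob on the wire, in bytes."""
    return FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT


def blob_capacity():
    """Payload bytes one blob can carry under the packing assumption."""
    return FIELD_ELEMENTS_PER_BLOB * USABLE_BYTES_PER_ELEMENT


def blobs_needed(batch_bytes):
    """Number of blobs required for a batch of the given size."""
    if batch_bytes < 0:
        raise ValueError("batch size cannot be negative")
    return math.ceil(batch_bytes / blob_capacity())
```

Under these assumptions one blob is 131,072 bytes (128 KiB) raw and carries 126,976 bytes of payload, so a 300,000-byte batch would need three blobs.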

Emerging Players: EigenDA and Avail

Not all DALs are built the same. EigenDA uses Ethereum’s security but offloads data storage to a network of validators who stake ETH. It’s not a separate chain. It’s a data availability service running on top of Ethereum. In testnet, it hit 100,000 transactions per second with costs as low as $0.0001 per transaction. That’s more than 1,000x cheaper than Ethereum’s main chain.

Then there’s Avail, built by the Polygon team. It’s a three-layer system: data availability, cross-chain interoperability (Nexus), and multi-token security (Fusion). It’s designed for enterprise use: think supply chains, banking, and government systems. Avail lets you plug in your own consensus rules and token economics, making it flexible but more complex to deploy.

Performance and Real-World Numbers

Here’s how the top DALs stack up as of late 2023:

| Layer | Throughput | Block Size | Cost per Tx | Storage per Light Node | Uptime |
|---|---|---|---|---|---|
| Celestia | 300-500 TPS | 1.25 MB | ~$0.001 | 1-2 GB | 99.98% |
| Ethereum (pre-danksharding) | 15-45 TPS | 90 KB | $1.23 | 1+ TB | 99.95% |
| Ethereum (post-danksharding) | ~100,000 TPS | 1.31 MB | ~$0.12 | 1+ TB | 99.95% |
| EigenDA | 100,000 TPS (testnet) | Variable | ~$0.0001 | 100 MB | 99.97% |
| Solana (monolithic) | 2,400 TPS | 1.5 MB | $0.00025 | 2+ TB | 99.2% |

Adoption and Ecosystem Growth

The market is exploding. In 2021, $25 million was invested in DALs. By 2022, it was $420 million. Messari predicts the market will hit $8.7 billion by 2027. Celestia has 15 active rollups. EigenDA is being tested by major DeFi protocols. Avail is already in use by Polygon’s enterprise clients.

StarkWare’s StarkEx system, used by 2.3 million users, already uses a data availability committee (DAC), a simpler version of a DAL. It’s not as decentralized as Celestia, but it’s proven in production.

And it’s not just DeFi. The EU’s MiCA regulation, effective December 2024, requires all blockchain transactions to have verifiable data availability. That’s a huge push for DAL adoption in finance, logistics, and public services.

Challenges: Tooling, Complexity, and Interoperability

It’s not all smooth sailing. Developers say the biggest hurdle is tooling. Celestia’s GitHub has 47 open issues on data sampling. Ethereum’s danksharding repo has 123 issues on KZG integration. Most blockchain devs are trained on Ethereum’s EVM. Celestia uses Cosmos SDK. Only 12% of devs say they’re comfortable with it.

Interoperability is another problem. Can a rollup on Celestia talk to one on EigenDA? Not yet. The Interchain Foundation just funded a $5 million project to fix that.

And then there’s the human factor. A Blockworks survey of 150 developers found 68% saw better scalability with DALs, but 52% said tooling was holding them back. It’s like having a Ferrari with no steering wheel.

What’s Next? The Road to 2026

Ethereum’s proto-danksharding drops in Q2 2024. That’s a turning point. It won’t be perfect, but it’ll be enough to push most rollups off the main chain and onto blobs. Celestia’s upcoming “Arbital” upgrade in Q1 2024 will add validity proofs, letting it verify not just that data is available, but that the state transitions are correct. That’s a step toward becoming a full execution layer.

Gartner predicts that by 2026, 70% of new blockchain apps will use modular architectures with dedicated DALs. That’s up from 15% in 2023. Big players are betting big. Coinbase invested $50 million in Celestia. Binance Labs launched a $100 million fund for modular infrastructure. This isn’t a side project anymore. It’s the future.

Should You Care?

If you’re a developer building on Ethereum, you already are. Rollups are your future. And rollups need data availability. Whether you use Ethereum’s blobs or a dedicated layer like Celestia, you’re using a DAL.

If you’re a user, you won’t see it. But you’ll feel it. Lower fees. Faster transactions. Fewer network outages.

If you’re an investor, this is the infrastructure layer of the next crypto cycle. Not the apps. Not the tokens. The plumbing.

The data availability layer isn’t flashy. It doesn’t have NFTs or meme coins. But without it, modular blockchains don’t work. And without modular blockchains, crypto doesn’t scale. It’s the quiet foundation. And it’s here to stay.

What is the main purpose of a data availability layer?

The main purpose of a data availability layer is to ensure that all transaction data published in a blockchain block is publicly accessible and cryptographically verifiable, without requiring every node to download and store the entire dataset. This enables scaling solutions like rollups to process more transactions while maintaining security through techniques like data availability sampling.

How does data availability sampling work?

Data availability sampling allows light clients (like mobile wallets) to verify that transaction data is available without downloading the full block. Instead, they randomly sample 30-40 small pieces of data. If every sampled piece is returned, mathematical guarantees (based on erasure coding) show the rest of the data is very likely available too, with 99.9% confidence. If any sampled piece is missing, the client treats the block as unavailable. This makes verification fast and cheap.
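The sampling loop itself is small. The following Python sketch simulates it rather than talking to a real network: a block is modeled as a list of booleans (piece available or withheld), and the function names, parameters, and seeded random generator are all hypothetical choices for illustration, not any client’s actual API.

```python
import random


def light_client_check(available, num_samples, rng):
    """Sample distinct piece indices at random and return True only if
    every sampled piece is available. `available` is a list of booleans."""
    picks = rng.sample(range(len(available)), num_samples)
    return all(available[i] for i in picks)


def detection_rate(total_pieces, withheld, num_samples, trials, seed=0):
    """Estimate how often a single light client notices that `withheld`
    randomly chosen pieces are missing from a block of `total_pieces`."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    caught = 0
    for _ in range(trials):
        available = [True] * total_pieces
        for i in rng.sample(range(total_pieces), withheld):
            available[i] = False
        if not light_client_check(available, num_samples, rng):
            caught += 1
    return caught / trials
```

Calling `detection_rate(256, 128, 30, 1000)` models a producer hiding half of a 256-piece block from a client that takes 30 samples. Because each sample has at best an even chance of landing on an available piece, a miss should be vanishingly rare.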

Is Celestia better than Ethereum for data availability?

It depends on your needs. Celestia is faster, cheaper, and designed only for data availability, making it ideal for rollups that want maximum scalability and low costs. Ethereum’s upcoming danksharding offers integration with its existing ecosystem, security, and user base, but still requires full nodes to store all data. Celestia wins on efficiency; Ethereum wins on adoption and trust.

Can I use a data availability layer without knowing how it works?

Yes. Most users interact with DALs indirectly through rollups like Arbitrum, Optimism, or zkSync. You’ll see lower fees and faster transactions, but you won’t need to interact with the DAL directly. Only developers building on top of rollups need to understand the underlying data layer.

Why do some blockchains still have outages if they use data availability layers?

Data availability layers solve one problem: data accessibility. They don’t fix execution bugs, consensus failures, or network congestion. Monolithic blockchains like Solana still have outages because their execution layer is overloaded or buggy. Dedicated DALs like Celestia avoid this by separating concerns: data is stored reliably, while execution is handled by separate rollups.

What’s the biggest risk with data availability layers?

The biggest risk is over-reliance on sampling assumptions. If the number of samples is too low, or if network conditions are adversarial (like a coordinated attack), an attacker might hide data without being caught. Researchers stress that parameter choices, like how many samples to take, must be carefully tuned. This is why Ethereum’s implementation is still being tested, and why projects like Celestia are constantly refining their security models.
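The parameter trade-off can be sketched with a simple bound. Assume an attacker must withhold at least a fraction `f` of the erasure-coded pieces to make a block unrecoverable, and that a client’s samples are independent and uniform. Then all `s` samples miss the gap with probability at most `(1 - f)^s`. The Python below turns that into two helpers. The function names are hypothetical, the independence assumption is a simplification, and the right value of `f` depends on the coding scheme: roughly one half for a simple rate-1/2 code and roughly one quarter for a two-dimensional scheme of the kind described in the 2018 paper.

```python
import math


def confidence(num_samples, min_withheld_fraction):
    """Lower bound on the chance that at least one sample hits a
    withheld piece, given the attacker hides at least this fraction."""
    if not 0.0 < min_withheld_fraction < 1.0:
        raise ValueError("fraction must be strictly between 0 and 1")
    return 1.0 - (1.0 - min_withheld_fraction) ** num_samples


def samples_needed(target_confidence, min_withheld_fraction):
    """Smallest sample count whose bound reaches the target confidence."""
    if not 0.0 < target_confidence < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    if not 0.0 < min_withheld_fraction < 1.0:
        raise ValueError("fraction must be strictly between 0 and 1")
    miss = 1.0 - target_confidence
    return math.ceil(math.log(miss) / math.log(1.0 - min_withheld_fraction))
```

Under these assumptions, a 99.9% target needs 10 samples when half the pieces must be withheld and 25 when only a quarter must be, which is why the sample count cannot be chosen independently of the coding scheme. The bound also covers a single client only; it does not model an attacker who answers some clients and not others.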

Are data availability layers only for Ethereum and Celestia?

No. While Ethereum and Celestia are the most talked-about, DALs are a general architectural pattern. Avail (by Polygon), EigenDA, and even DAC-based systems like StarkEx all use variations of data availability. Any blockchain aiming for high throughput without sacrificing decentralization can benefit from a dedicated or integrated data availability layer.