When you're managing financial records, clinical trial data, or global supply chain logs, a single wrong number can cost millions. That’s why enterprises using Oracle’s cloud platforms don’t just collect data; they verify it. Oracle’s data verification methods aren’t just tools. They’re precision systems built to catch errors before they ripple through critical operations. And in an era where blockchain demands immutable, trustworthy data, these methods are more relevant than ever.

How Oracle Verifies Data: The Core Mechanics

Oracle doesn’t guess whether data is right. It checks. Every time. At the heart of this process is Oracle Enterprise Data Quality (EDQ), a system that compares incoming data against trusted reference sources. Think of it like a spellchecker for databases, but far more powerful.

For example, when an address is entered into Oracle Fusion Cloud Applications, EDQ doesn’t just look for typos. It cross-references it with global postal databases, geocodes it to exact coordinates, and flags inconsistencies. The system returns one of 11 precise status codes:

- 0: Verified Exact Match

- 1: Verified Multiple Matches

- 2: Verified Matched to Parent

- 3: Verified Small Change

- 4: Verified Large Change

- 5: Added

- 6: Identified No Change

- 7: Identified Small Change

- 8: Identified Large Change

- 9: Empty

- 10: Unrecognized

Each code tells you exactly what happened. Was it a typo? A duplicate? A completely new entry? This level of detail lets teams fix problems fast, not guess at them.
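To make the list above concrete, here is a minimal Python sketch that maps the 11 codes to named constants and buckets them into actions. The code values and labels come from the list; the `AddressStatus` name and the `triage` policy (which codes count as "accept", "review", or "reject") are assumptions for illustration, not Oracle's API or recommended handling.

```python
from enum import IntEnum


class AddressStatus(IntEnum):
    """The 11 status codes from the list above, keyed by numeric value."""
    VERIFIED_EXACT_MATCH = 0
    VERIFIED_MULTIPLE_MATCHES = 1
    VERIFIED_MATCHED_TO_PARENT = 2
    VERIFIED_SMALL_CHANGE = 3
    VERIFIED_LARGE_CHANGE = 4
    ADDED = 5
    IDENTIFIED_NO_CHANGE = 6
    IDENTIFIED_SMALL_CHANGE = 7
    IDENTIFIED_LARGE_CHANGE = 8
    EMPTY = 9
    UNRECOGNIZED = 10


def triage(code: int) -> str:
    """Hypothetical policy: turn a status code into a follow-up action."""
    status = AddressStatus(code)  # raises ValueError for codes outside 0-10
    if status in (AddressStatus.VERIFIED_EXACT_MATCH,
                  AddressStatus.IDENTIFIED_NO_CHANGE):
        return "accept"
    if status in (AddressStatus.EMPTY, AddressStatus.UNRECOGNIZED):
        return "reject"
    # Everything else changed, matched ambiguously, or was newly added.
    return "review"
```

A team would likely tune the buckets to its own risk tolerance; the point is that each code can drive a specific, automated next step.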

On top of that, Oracle uses an AccuracyCode system: V (Verified), P (Partially Verified), U (Unverified), A (Ambiguous), and C (Conflict). These aren’t just labels; they’re decision triggers. If a record shows ‘C’ for Conflict, the system flags it for human review. No automation skips over contradictions.
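The "decision trigger" idea can be sketched as a small routing function. Only the rule that ‘C’ goes to human review comes from the text above; the handling of the other four codes, and the names `ACCURACY_CODES` and `route`, are assumptions made for this example.

```python
# Letter codes and labels as listed in the article.
ACCURACY_CODES = {
    "V": "Verified",
    "P": "Partially Verified",
    "U": "Unverified",
    "A": "Ambiguous",
    "C": "Conflict",
}


def route(accuracy_code: str) -> str:
    """Return the queue a record should go to, based on its AccuracyCode."""
    if accuracy_code not in ACCURACY_CODES:
        raise ValueError(f"unknown AccuracyCode: {accuracy_code!r}")
    if accuracy_code == "C":
        return "human_review"   # stated in the text: conflicts are never auto-resolved
    if accuracy_code == "V":
        return "auto_accept"    # assumption: fully verified records pass through
    return "hold"               # assumption: P, U, and A wait for a follow-up check
```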

Why This Matters for Blockchain

Blockchain is built on trust. But trust isn’t automatic. If bad data goes into a blockchain, it becomes part of the ledger forever. That’s why Oracle’s verification methods are critical for blockchain integrations.

Oracle’s upcoming Clinical One 24C release, scheduled for Q3 2024, will introduce blockchain-based verification trails for clinical trial data. This means every lab result, patient dosage, or test outcome gets verified by Oracle’s system before being written to the chain. No guesswork. No manual entry. Just verified, timestamped, immutable records.

It’s the same for financial transactions. Bank of America’s 2023 implementation of Oracle Banking Transaction Verification cut payment errors by 75%. How? By validating API headers, endpoint signatures, and transaction amounts in real time before they hit their blockchain-backed reconciliation layer. Competitors like Informatica can connect to more systems, but Oracle’s verification is baked into the flow, with no plug-ins needed.

Industry-Specific Verification: Healthcare, Finance, Manufacturing

Oracle doesn’t use one-size-fits-all rules. It tailors verification to the industry.

In healthcare, Oracle Clinical One’s Source Data Verification (SDV) checks only the critical variables in clinical trials, such as drug dosages or adverse events, and skips routine data. This cuts verification effort by 60-70%, according to Pfizer’s 2022 case study. It does not suit everyone, though: one hospital abandoned SDV after six months because it couldn’t handle non-standard patient formats. That’s not a flaw in Oracle so much as a warning: verification only works if your data structure matches the system’s expectations.

In finance, Oracle’s Banking Transaction Verification checks every request header and endpoint. It doesn’t just verify the amount; it verifies who sent it, where it came from, and whether the system that sent it is authorized. This is vital for compliance with SEC Rule 17a-4(f), which now requires stricter audit trails for transaction data.
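As a rough illustration of checking "who sent it" and "whether the system is authorized", the sketch below verifies an HMAC signature over the endpoint and amount. It is a generic technique using Python's standard `hmac` module, not Oracle's implementation; the header names `X-Source-System` and `X-Signature`, the `AUTHORIZED_SYSTEMS` table, and the message layout are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical registry of systems allowed to submit transactions.
AUTHORIZED_SYSTEMS = {"core-banking": b"demo-secret"}


def sign(secret: bytes, endpoint: str, amount: str) -> str:
    """Compute the signature a sender would attach to a request."""
    message = f"{endpoint}|{amount}".encode("utf-8")
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_request(headers: dict, endpoint: str, amount: str) -> list[str]:
    """Return a list of problems; an empty list means the request passed."""
    secret = AUTHORIZED_SYSTEMS.get(headers.get("X-Source-System"))
    if secret is None:
        return ["unauthorized source system"]
    expected = sign(secret, endpoint, amount)
    # compare_digest avoids leaking information through timing differences.
    if not hmac.compare_digest(expected, headers.get("X-Signature", "")):
        return ["signature mismatch"]
    return []
```

Because the amount is part of the signed message, a tampered amount fails the same check as a forged sender.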

Manufacturers use it to match inventory logs from factories to warehouse systems. A mismatch in serial numbers or batch IDs triggers an alert before products ship. Oracle claims 99.98% accuracy in these reconciliations based on internal case studies.
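The reconciliation described here boils down to comparing two keyed sets. The following sketch assumes each log row is a dict with hypothetical `serial` and `batch` fields; it reports serial numbers missing on either side and serials whose batch IDs disagree.

```python
def reconcile(factory_rows, warehouse_rows):
    """Compare factory and warehouse logs by serial number and batch ID."""
    factory = {row["serial"]: row["batch"] for row in factory_rows}
    warehouse = {row["serial"]: row["batch"] for row in warehouse_rows}
    shared = factory.keys() & warehouse.keys()
    return {
        "missing_in_warehouse": sorted(factory.keys() - warehouse.keys()),
        "missing_in_factory": sorted(warehouse.keys() - factory.keys()),
        "batch_mismatch": sorted(s for s in shared if factory[s] != warehouse[s]),
    }
```

Any non-empty list in the result is the kind of mismatch that should raise an alert before products ship.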

What Goes Wrong, and Why

Even the best systems fail if you don’t set them up right.

One common mistake: trying to validate data before it’s been extracted. Oracle’s documentation warns that validating pre-extract data gives false results. That’s not a bug; it’s a logic error. The system only compares data after it’s been pulled from the source. Start too early, and you’re checking ghosts.
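One way to guard against this in your own tooling is to filter rows by the initial extract date before comparing anything. This is a sketch of that guard only; the `created_on` field and the function name are assumptions, and the real cutoff comes from your pipeline settings.

```python
from datetime import date


def split_by_extract_date(rows, initial_extract_date: date):
    """Separate rows that the pipeline extracted from rows that predate it."""
    in_scope = [r for r in rows if r["created_on"] >= initial_extract_date]
    skipped = [r for r in rows if r["created_on"] < initial_extract_date]
    return in_scope, skipped
```

Only the `in_scope` rows should be validated; comparing the `skipped` rows would report mismatches for data the pipeline never pulled.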

Another issue: international addresses. Oracle’s Address Verification works well for North America and Europe (85-90% accuracy) but drops below 75% in parts of Southeast Asia, Africa, and Latin America. Local providers like Loqate outperform it there. If you’re managing global supply chains, you need to layer in regional validation tools.

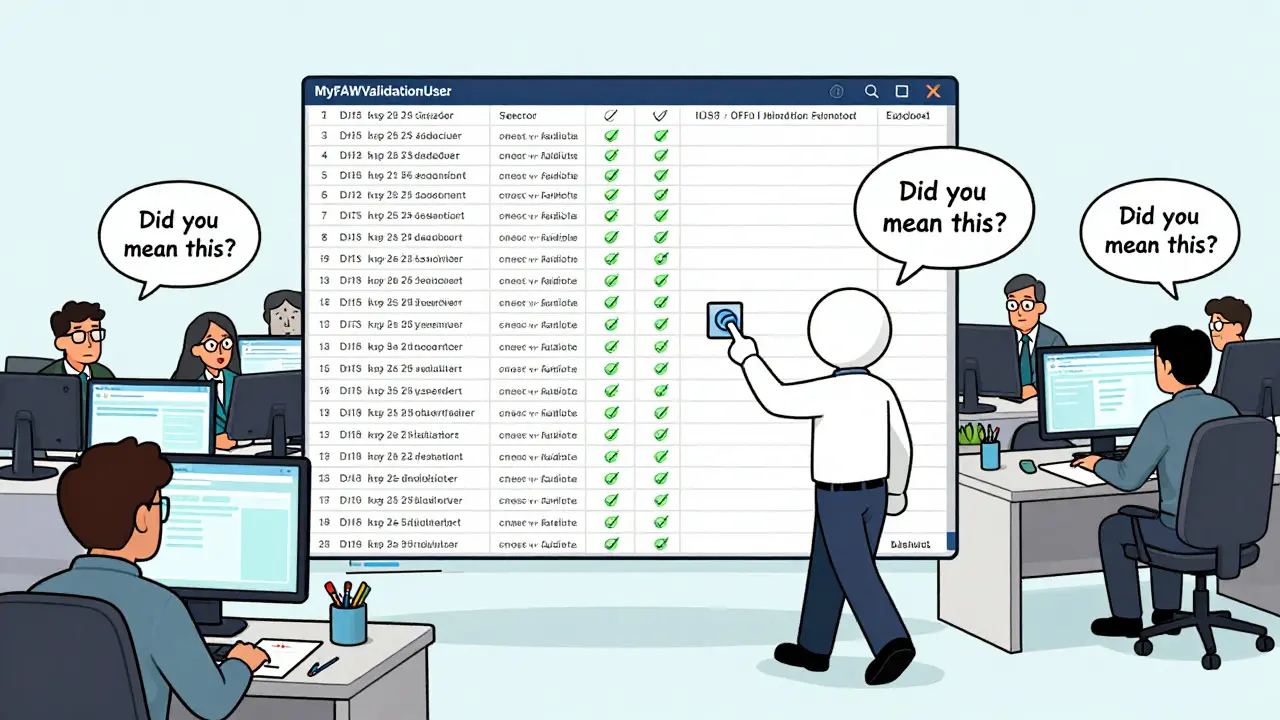

Then there’s credential management. Oracle requires a dedicated validation user, usually named ‘MyFAWValidationUser’, with identical privileges across Oracle Fusion Data Intelligence and Oracle Cloud Applications. No special characters. No spaces. Many companies spent months fixing security policies just to get this working. One Reddit user called it a “nightmare for IAM teams.”

Data type mismatches cause 38% of implementation failures. A date stored as text in one system and as a timestamp in another? The system flags it as an error. The fix? Custom SQL transformations. It’s not plug-and-play. You need someone who understands both the data and the tool.
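The article points to custom SQL transformations as the fix. The same idea, shown here in Python for illustration, is to normalize both representations to one type before comparing. The `to_date` helper and its default text format are assumptions; use whatever format your source system actually stores.

```python
from datetime import date, datetime


def to_date(value, text_format: str = "%Y-%m-%d") -> date:
    """Normalize a text date or a timestamp to a plain calendar date."""
    # datetime is a subclass of date, so it has to be checked first.
    if isinstance(value, datetime):
        return value.date()
    if isinstance(value, date):
        return value
    return datetime.strptime(value.strip(), text_format).date()
```

With both sides normalized, `to_date("2024-05-01")` and `to_date(datetime(2024, 5, 1, 13, 30))` compare as equal instead of being flagged as a type mismatch.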

How to Get Started

If you’re implementing Oracle’s data verification, here’s what actually works:

- Create the validation user: ‘MyFAWValidationUser’, with a password that contains no symbols or spaces.

- Grant identical privileges in both Oracle Fusion Data Intelligence and your Oracle Cloud Apps environment.

- Go to Console > Data Validation > Source Credentials and set up your data sources.

- Build your first validation set: pick the subject area (e.g., Customer Addresses), choose the metrics (e.g., count of mismatches), and select the columns to compare.

- Run a test on a small dataset first; don’t jump to 1 million records.

- Use the 11 status codes to build dashboards. Train your team to read them like a report card.
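To show what a "count of mismatches" metric over selected columns means, here is a small stand-alone sketch. It is not how Oracle's console computes the metric; the function name, the row layout, and the choice to ignore rows missing from the target are assumptions for the example.

```python
def count_mismatches(source, target, key, columns):
    """Count, per column, how many rows differ between source and target.

    Rows are matched on `key`. Rows absent from the target are skipped here;
    a fuller check would report them separately.
    """
    target_by_key = {row[key]: row for row in target}
    counts = {col: 0 for col in columns}
    for row in source:
        other = target_by_key.get(row[key])
        if other is None:
            continue
        for col in columns:
            if row[col] != other[col]:
                counts[col] += 1
    return counts
```

Running this on a small sample first, as the steps suggest, makes it easy to check the numbers by hand before scaling up.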

Oracle University recommends 40-60 hours of training for analysts to become proficient. That’s not optional. The system’s power lies in its detail, and detail requires understanding.

How Oracle Compares to the Competition

Informatica supports over 150 data connectors. Oracle supports about 50. That’s a clear gap. But here’s the catch: if you’re already on Oracle Cloud, 95% of Oracle’s validation sets work out of the box with zero configuration. Informatica needs custom mapping for almost everything.

Gartner calls Oracle a ‘Challenger’ in data quality tools, not a leader. Why? Because it’s locked into its own ecosystem. Forrester gave Oracle 4.2/5 stars for address verification but slammed the learning curve. And experts like Thomas Redman say the status codes are brilliant… if you know how to read them.

Bottom line: if you’re all-in on Oracle Cloud, their verification tools are the most seamless option. If you’re using SAP, Snowflake, or AWS, you’ll hit walls.

The Future: AI, Blockchain, and Predictive Quality

Oracle isn’t resting. Its May 2024 update to Fusion Data Intelligence added AI that suggests fixes for 65% of common validation errors. That’s huge. Instead of humans hunting down mismatches, the system says: “This date is off by two days-did you mean this one?”

The next step? Generative AI that writes its own validation rules. Oracle’s roadmap shows this coming in 2025. Imagine telling the system: “Verify all purchase orders over $50K against vendor history.” And it builds the logic automatically.

And yes, blockchain is part of the future. Oracle is embedding verification trails directly into blockchain ledgers. No more trusting third-party oracles. The data is verified before it’s written. That’s the endgame: data integrity built in, not bolted on.
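The "verify before it’s written" principle can be illustrated with a toy hash-chained ledger. This is a teaching sketch built on Python's standard library, not Oracle's blockchain integration; the `VerifiedLedger` class and the caller-supplied `verify` function are hypothetical.

```python
import hashlib
import json


class VerifiedLedger:
    """Toy append-only ledger: each block stores the previous block's hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.blocks = []

    @staticmethod
    def _digest(prev_hash, record):
        payload = json.dumps(record, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, record, verify):
        """Write the record only if the verification function accepts it."""
        if not verify(record):
            raise ValueError("record failed verification; nothing was written")
        prev_hash = self.blocks[-1]["hash"] if self.blocks else self.GENESIS
        self.blocks.append({
            "record": record,
            "prev": prev_hash,
            "hash": self._digest(prev_hash, record),
        })

    def is_intact(self):
        """Recompute every hash; any edit to a stored record breaks the chain."""
        prev_hash = self.GENESIS
        for block in self.blocks:
            expected = self._digest(prev_hash, block["record"])
            if block["prev"] != prev_hash or block["hash"] != expected:
                return False
            prev_hash = block["hash"]
        return True
```

The design choice worth noting is the order of operations: rejection happens before anything is appended, so a bad record never needs to be "corrected" on an immutable ledger.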

Real Results, Real Risks

Companies using Oracle’s verification methods report:

- Up to 90% fewer data errors (Oracle’s internal benchmark)

- 20+ hours saved weekly on reconciliation (Gartner user review)

- 75% fewer payment errors at Bank of America

- 40% faster query resolution in clinical trials (Pfizer)

But the risks are real:

- 32% of users with non-Oracle data warehouses struggle with integration

- 42% complain about inconsistent error messages

- 6 months of failed implementation at a healthcare provider due to poor data structure

Oracle’s tools are powerful, but they’re not magic. They require clean data, trained staff, and the right use case. Use them right, and they become your most reliable asset. Use them wrong, and they become another cost center.

What is the main purpose of Oracle Data Verification Methods?

The main purpose is to ensure data accuracy, consistency, and integrity by comparing incoming data against trusted reference sources. These methods catch errors before they enter enterprise systems, reduce compliance risks, and improve decision-making, which is especially critical in regulated industries like healthcare and finance.

How does Oracle verify addresses?

Oracle uses its Address Verification processor (AV), which cross-references addresses with global postal databases. It returns one of 11 status codes indicating whether the address was verified exactly, matched to a parent, had a small or large change, or was unrecognized. It also geocodes addresses to exact coordinates and supports multi-country searches.

Can Oracle verify data on non-Oracle systems?

Yes, but with limitations. Oracle supports around 50 data sources, mostly Oracle Cloud applications. While it can connect to external systems like SQL Server or Snowflake, integration is less seamless than with Informatica or Talend. Users report integration challenges in 32% of cases when using non-Oracle data warehouses.

What are the 11 Oracle verification status codes?

The 11 status codes are: 0 (Verified Exact Match), 1 (Verified Multiple Matches), 2 (Verified Matched to Parent), 3 (Verified Small Change), 4 (Verified Large Change), 5 (Added), 6 (Identified No Change), 7 (Identified Small Change), 8 (Identified Large Change), 9 (Empty), and 10 (Unrecognized). Each code tells you exactly what happened to the data during validation.

Why is the validation user named 'MyFAWValidationUser'?

It’s a convention Oracle recommends to ensure consistency across environments. This user must have identical privileges in both Oracle Fusion Data Intelligence and Oracle Fusion Cloud Applications. The name isn’t mandatory, but using it avoids confusion during setup and audits.

Is Oracle’s data verification compatible with blockchain?

Yes. Oracle is embedding its verification system directly into blockchain ledgers. The upcoming Clinical One 24C release will use blockchain-based verification trails for clinical trial data, ensuring every entry is validated before being written. This eliminates the need for external oracles and strengthens data integrity on-chain.

How accurate is Oracle’s data verification?

Oracle claims up to 90% reduction in data errors. For financial reconciliations, accuracy reaches 99.98%. Address verification is 85-90% accurate in North America and Europe but drops below 75% in some international regions. Accuracy depends heavily on data quality at the source and proper configuration.

What’s the biggest mistake people make when using Oracle verification?

Trying to validate data before it’s been extracted from the source system. Oracle’s system only compares data after it’s pulled into the pipeline. Validating pre-extract data gives false results. Always start validation from the initial extract date as defined in your pipeline settings.

Do I need special training to use Oracle’s verification tools?

Yes. Oracle University recommends 40-60 hours of training for data analysts to configure custom validation sets and interpret the 11 status codes correctly. The system is powerful but complex; without training, users often misread results or misconfigure rules.

What’s next for Oracle Data Verification?

Oracle plans to add generative AI in 2025 to automatically generate validation rules from natural language prompts. It’s also expanding support for non-Oracle data sources and deepening blockchain integration. The goal is to shift from reactive error-checking to predictive data quality: anticipating problems before they happen.